The Golden Age of Structural Biology

In 1953, Francis Crick and James Watson deciphered the double helix structure of DNA. That moment marked the birth of structural biology, based on the idea that the shape of molecules determines their properties and how they behave inside living organisms.

Back then, there were no computers that could model molecular shapes or calculate the movement and physical properties of these molecules. Several decades later, the first realistic simulations of materials and biomolecules could be run on mainframes, using tools like molecular dynamics (MD) simulations. In MD, quantum particles are approximated as classical particles subject to force fields.
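To make the MD idea concrete, here is a minimal sketch rather than a production setup. It assumes two identical particles on a line, a Lennard-Jones potential as the force field, reduced units, and velocity Verlet integration. None of these choices come from a specific MD package; the function names and parameters are illustrative.

```python
def lj_potential(r, epsilon=1.0, sigma=1.0):
    """Lennard-Jones potential energy for two particles at separation r."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def lj_force(r, epsilon=1.0, sigma=1.0):
    """Force along the separation, F = -dU/dr (positive means repulsive)."""
    sr6 = (sigma / r) ** 6
    return 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r


def pair_forces(x):
    """Classical forces on both particles from the force field."""
    f = lj_force(x[1] - x[0])
    return [-f, f]  # equal and opposite (Newton's third law)


def simulate(r0=1.5, steps=2000, dt=0.001, mass=1.0):
    """Integrate the two-particle system with velocity Verlet."""
    x = [0.0, r0]   # positions
    v = [0.0, 0.0]  # velocities, both particles start at rest
    f = pair_forces(x)
    energies = []
    for _ in range(steps):
        # Half-step velocity update, then a full-step position update.
        v = [vi + 0.5 * dt * fi / mass for vi, fi in zip(v, f)]
        x = [xi + dt * vi for xi, vi in zip(x, v)]
        # Recompute forces at the new positions and finish the velocity update.
        f = pair_forces(x)
        v = [vi + 0.5 * dt * fi / mass for vi, fi in zip(v, f)]
        kinetic = sum(0.5 * mass * vi * vi for vi in v)
        energies.append(kinetic + lj_potential(x[1] - x[0]))
    return x, v, energies


x, v, energies = simulate()
drift = max(abs(e - energies[0]) for e in energies)
print(f"final separation: {x[1] - x[0]:.3f}, max energy drift: {drift:.2e}")
```

The particles have no quantum description at all here: they are points that feel a force derived from a fitted potential. Real force fields add many more terms (bonds, angles, electrostatics), but the integration loop has the same shape.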

The calculations necessary to derive molecular behavior from fundamental physical laws, like the Schrödinger equation, are complex and impractical for very large numbers of particles. Because of this, methods that rely on physical laws (also called ab initio) are hard to scale and are constrained by the availability of computing capacity. This is the case for biological systems, which have very large and complex structures interacting with each other. Proteins, for example, are the fundamental structural and functional building blocks of cells, and they can have thousands of amino acids and tens of thousands of atoms.

In 2020, AlphaFold marked a drastic advance in molecular simulation. AlphaFold is a deep learning system that approximates protein shapes. It leverages a large library of structures discovered experimentally over the course of several decades, which are available at the Protein Data Bank. The AlphaFold team predicted the structure of essentially every protein in humans and multiple other species.

Previously, protein structures were discovered experimentally using X-ray crystallography, nuclear magnetic resonance or electron microscopy, a process that requires months or years of work. This new method effectively helped the scientific community save decades of effort.

The application of AI to protein folding was lauded as the 2021 scientific breakthrough of the year by Science magazine. However, the most significant contribution of AlphaFold in the long term may not be the protein structures themselves, but bringing the ideas of approximate physics to the forefront of the scientific process. At its core, AlphaFold has no knowledge of the Schrödinger equation, classical mechanics, physics or biology. It simply extracts insights from a library of geometric shapes and applies those insights to new shapes. It only understands statistical regularity, just like other machine learning systems.

The methods of approximate physics are such a radical departure from traditional scientific thought that it’s worth looking at an example in more detail. Imagine an experiment in which we drop a solid ball from a particular height, and we want to predict when it will touch the ground. One approach to answer this question would be to use the law of gravity to predict the acceleration on the ball, and given the height from which it’s dropped, calculate the time it would take to reach the ground.

Another approach would be to drop several thousand balls of similar shapes, weights and material composition, and then build a statistical model which takes the features of the original ball into account and gives an approximate answer. This solution could be even more precise than the first one, because it takes into account air friction and other physical realities that our simplistic ab initio model may have overlooked.

In practice, the second model could be strictly better. It could be faster to calculate, and is probably more accurate for the use cases that we care about. But here is the key difference: it gives no insight at all into why the phenomenon is the way it is. It has no knowledge of gravity, friction, pressure, air turbulence, or any other physical feature of the experiment. In a way, the second method finds a shortcut through physical knowledge and computational models to give us an answer, which can be very precise, but is based on statistical data rather than theoretical knowledge.
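The two approaches can be put side by side in a toy sketch. Everything here is an assumption made for illustration: the "measurements" are synthetic, generated from a quadratic air drag model with an arbitrary terminal velocity of 20 m/s plus random timing noise, and the statistical model is a plain nearest-neighbour average over past drops.

```python
import math
import random

G = 9.81  # gravitational acceleration in m/s^2


def ab_initio_time(height):
    """Fall time from the law of gravity alone (vacuum): t = sqrt(2h / g)."""
    return math.sqrt(2.0 * height / G)


def measured_time(height, terminal_velocity=20.0):
    """Synthetic stand-in for a real measurement: fall with quadratic air drag."""
    ratio = G * height / terminal_velocity ** 2
    return (terminal_velocity / G) * math.acosh(math.exp(ratio))


def run_experiments(n, rng, noise=0.02):
    """Drop n balls from random heights (1 to 50 m) and record noisy fall times."""
    drops = []
    for _ in range(n):
        height = rng.uniform(1.0, 50.0)
        drops.append((height, measured_time(height) + rng.gauss(0.0, noise)))
    return drops


def statistical_time(height, drops, k=25):
    """Average the fall times of the k past drops closest in height."""
    nearest = sorted(drops, key=lambda drop: abs(drop[0] - height))[:k]
    return sum(time for _, time in nearest) / k


rng = random.Random(0)
drops = run_experiments(2000, rng)

height = 30.0
truth = measured_time(height)
print(f"ab initio error:   {abs(ab_initio_time(height) - truth):.3f} s")
print(f"statistical error: {abs(statistical_time(height, drops) - truth):.3f} s")
```

The statistical model contains no `G`, no drag term and no equation of motion, yet inside the range of heights it has seen it beats the vacuum formula. Outside that range it has nothing to offer: asked about a 500 m drop, it would simply return the average of the highest drops in its dataset.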

Of course, everything in the natural sciences is approximate. Physical laws are approximations to material reality. The equations that we use to describe these laws often include approximations in their derivation. Then, these equations are typically approximated numerically, and the computers that we use for those numerical calculations make approximations as well, given their limited precision. But there is an overarching attempt to stay as true to reality as possible (often with provable error bands). Not here. Here we are throwing that out the window and saying that, if it ends up close enough to the end result that we want, we don’t care where it came from.

A few important questions come up: are there cases in which the statistical approach breaks down? When can we be reasonably certain that our answer is close to the reality indicated by experiments? When is it too far to be of practical use? When can we use statistical methods to derive theoretical truths? We don’t know yet.

Despite the lack of guarantees, the power of this method is so significant that there has been a Cambrian explosion of research using it, to the point that tasks which seemed impossible within structural biology just a couple of years ago can now be achieved easily. This has spawned a tremendous amount of research extending these methods across structural biology. A golden age.

A few highlights:

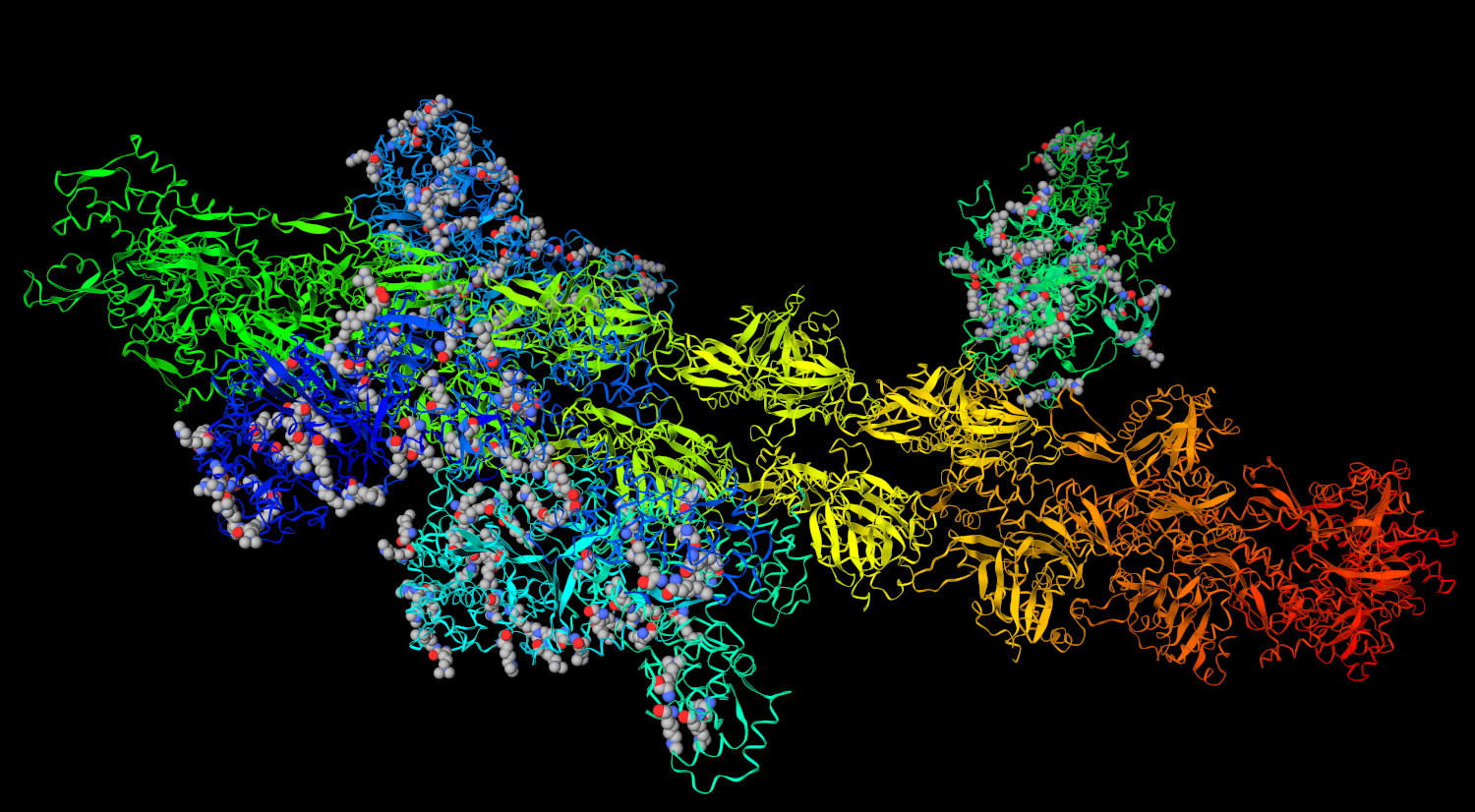

- Estimating protein-protein interactions, or for example this using a different approach. Proteins perform useful functions mostly as they interact with other molecules, such as other proteins. Being able to predict these interactions when they are experimentally unknown can be useful to understand the inner workings of biological systems and to develop therapeutic strategies.

- Predicting protein-peptide interactions and also this, as a special case of the general problem of predicting interactions of proteins with other molecules.

- Predicting interactions of specific groups of proteins, such as antibodies or T-cell receptors in the human immune system.

- Determining the structure of protein complexes. Protein complexes are especially important examples of protein-protein interactions. Many proteins have to be surrounded by other proteins in order to perform their useful function. There are many other approaches, such as this. A variety of complexes have been studied, such as complexes in the human mitochondria, complexes in the nuclear pores and complexes in the cell membrane. There are even large-scale efforts across many different types of complexes across all eukaryotes.

- Current deep learning systems are trained on structures determined experimentally. This makes it hard to predict structures that are slightly different from reference models, for example proteins whose corresponding gene has a mutation, as in the case of genetic diseases or cancer. As a result, it is harder to use these models to evaluate the effect of mutations on protein function.

- After proteins assemble, they go through a series of modifications that affect their structure and function in living organisms. As a result, even after predicting their tertiary structure it may be necessary to study post-translational modifications.

- There is also a whole host of research areas that don’t involve proteins at all. Some of these ideas can be applied to RNA, to determine its structure.

- Machine learning can also be applied to the molecular simulation techniques themselves. For example, to make molecular dynamics faster or to make molecular dynamics scale.

- Another example is to approximate the Schrödinger equation, or make the DFT approximation more precise, tackling the fractional electron problem.

- We have also seen many improvements that make the technology more available or easier to use, like simply reimplementing it in PyTorch.

- Many of these methods involve estimating the structure of a molecule under study, and then the properties that can be derived from this structure. But we can also look at other representations of molecular structures, such as string representations of molecules, which can be used within machine learning models. We can then predict molecular properties directly from language models, or predict molecular properties from graph models using a graph representation of a molecule, which could be used within a graph neural network, for example.

- One especially promising category of research is the combination of computational and experimental techniques to overcome the limitations of our physical hardware. For example, using machine learning to interpret and process data coming from optical microscopes, such as this, or to find cell organelles in electron microscopy images and to identify individual molecules in electron tomography and also in X-ray crystallography images.

- Last, but not least, one potentially revolutionary application is the design of new molecules. See for example this, this, this, or this. But in general these techniques are still unproven.
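
One of the highlights above mentions string and graph representations of molecules. As a rough illustration of how the two relate, the sketch below turns a string such as `CCO` (the heavy atoms of ethanol in SMILES notation) into a graph and runs one neighbour-aggregation step of the kind a graph neural network builds on. The parser is a deliberately tiny, hypothetical helper that only handles unbranched chains of C, N and O atoms with single bonds. Real SMILES strings need a proper cheminformatics toolkit.

```python
ELEMENTS = ["C", "N", "O"]  # toy vocabulary, an assumption of this sketch


def chain_to_graph(smiles):
    """Parse an unbranched chain such as 'CCO' into (atoms, bonds)."""
    atoms = list(smiles)
    for atom in atoms:
        if atom not in ELEMENTS:
            raise ValueError(f"unsupported symbol in toy parser: {atom!r}")
    # Consecutive atoms in the string are joined by a single bond.
    bonds = [(i, i + 1) for i in range(len(atoms) - 1)]
    return atoms, bonds


def neighbor_lists(n_atoms, bonds):
    """Adjacency list: for each atom index, the indices it is bonded to."""
    neighbors = {i: [] for i in range(n_atoms)}
    for a, b in bonds:
        neighbors[a].append(b)
        neighbors[b].append(a)
    return neighbors


def one_hot(atom):
    """Initial node feature: which element the atom is."""
    return [1 if atom == element else 0 for element in ELEMENTS]


def message_passing_step(features, neighbors):
    """New feature of each atom = its own feature plus the sum of its neighbours'."""
    updated = []
    for i, own in enumerate(features):
        updated.append([
            value + sum(features[j][d] for j in neighbors[i])
            for d, value in enumerate(own)
        ])
    return updated


atoms, bonds = chain_to_graph("CCO")
neighbors = neighbor_lists(len(atoms), bonds)
features = [one_hot(atom) for atom in atoms]
print(message_passing_step(features, neighbors))
# [[2, 0, 0], [2, 0, 1], [1, 0, 1]]
```

After one step, each atom's feature counts the elements in its immediate neighbourhood. A graph neural network stacks several such steps with learned weights and then pools the atom features into a prediction for the whole molecule.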

Approximate physics doesn’t give us any insight about the underlying laws of nature. But it enables us to do things that were very hard before. Because of this, it’s easy to underestimate how transformational it can be. It reminds me of the radical reduction in the cost of gene sequencing, from hundreds of millions to hundreds of dollars.

To conclude, a bit of speculation. How will this research evolve in the next few years? Here are my predictions:

- In spite of these technologies, we will not see significant progress in personalized drug development. These methods rely on large datasets. Personalized approaches, by definition, work with datasets of one. Personalized medicine will have to find other tools.

- It is too early for generative methods. Generative methods have achieved remarkable results in image and sound generation. But these methods also rely on massive datasets accumulated over many years. It’s also very easy to evaluate the end result of an image generator (does an image look like a face or not?). It’s much harder to evaluate whether a specific compound is useful for its intended target.

- Computational and experimental methods will be combined. Cryo-ET data will be cleaned up and processed through machine learning algorithms to understand molecule location and behavior inside living cells. This will help discover novel pathways. The evolution of microscopes will follow mobile photography. After approaching the limits of physical lenses, phones had to rely increasingly on computational techniques to improve image quality.

- We will be able to predict the structure of many different types of molecules, and to easily calculate more types of molecule-molecule interactions, including cell and nucleus membrane lipids and metabolic pathways and the structure of many different types of DNA and RNA inside cells. All known molecules, complexes and pathways in human cells will be individually modelled.

- Simulations will get faster and bigger. Efficiency improvements will let us run simulations several orders of magnitude larger than anything tried before, up to billions of atoms. We will also be able to simulate for longer periods of time, which will open up the door to simulating entire pathways, condensates, organelles and cell functions. Over time, we will be able to create atomic simulations of entire cells.

Do you agree? Disagree? Did you write a paper I should have cited? If you are interested in large-scale simulation for structural biology, reach out to me on Twitter or LinkedIn.